Core Banking Integration with Salesforce

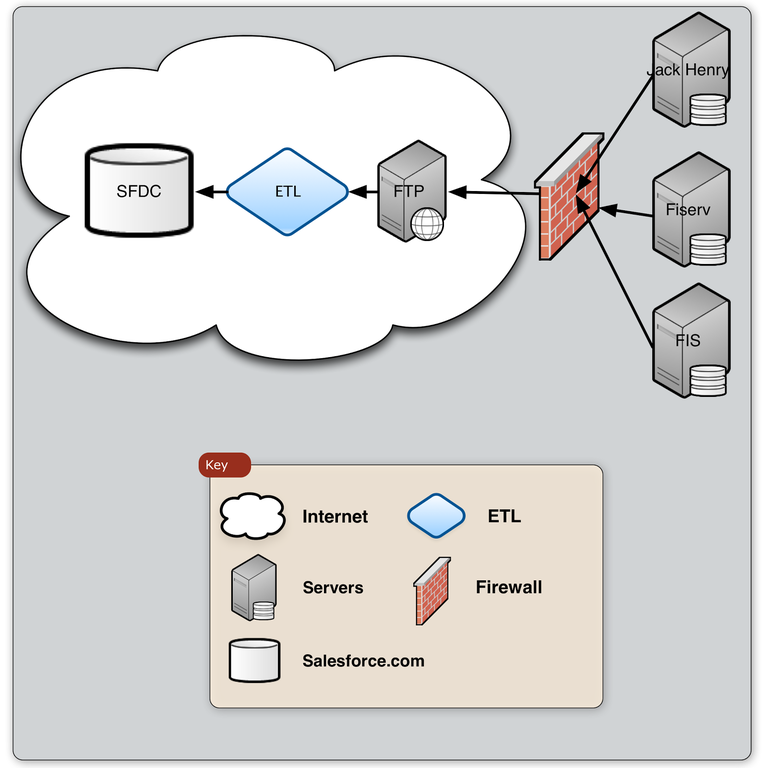

Integration can mean a lot of things, from a simple custom link to the real-time movement of data between two different systems. In the following use cases we are going to look at the integration of banking cores such as Jack Henry, Fiserv and FIS into Salesforce, discussing some of the pitfalls and best practices along the way.

Integration Types

The first area to dig into is the two typical types of integration. Real-time integrations are where the two systems share data as fast as they can. An example of this would be in the account opening process, where a bank wants a newly Closed Won opportunity to send a real-time message to the core banking solution to establish the new financial account, with data items like the account number and customer data coming directly from Salesforce. This could also be done the other way, originating from the banking core to Salesforce, with the limitation usually being the banking core's lack of accessible programming interfaces.
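As a rough illustration of the Salesforce-to-core direction, here is a minimal Python sketch of turning a won opportunity into a message for the core. Everything about the message shape is an assumption for illustration: the `build_account_request` helper, the `AccountName` key, the `Product_Type__c` custom field and the JSON keys are hypothetical, and a real core (Jack Henry, Fiserv, FIS) will define its own interface.

```python
import json
from typing import Optional


def build_account_request(opportunity: dict) -> Optional[str]:
    """Return a JSON message for the core, or None if the deal is not won.

    The opportunity dict is a flattened stand-in for a Salesforce record;
    the output keys are hypothetical, not a real core banking schema.
    """
    if opportunity.get("StageName") != "Closed Won":
        return None
    message = {
        "customerName": opportunity["AccountName"],
        "productType": opportunity["Product_Type__c"],
        # Sent along so the core's reply can be matched back to Salesforce.
        "salesforceOpportunityId": opportunity["Id"],
    }
    return json.dumps(message, sort_keys=True)
```

Carrying the Salesforce record Id in the message is one simple way to tie the core's response back to the opportunity that triggered it.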

The second, and more popular, integration type is batch. This is where a large volume of data is moved from one system to the other in a scheduled and automated fashion. For example, a bank might want to populate client data from their core into Salesforce, so they will build a batch process to extract the data from the core, transform it to fit the new Salesforce data model and load it using the Salesforce APIs. This would typically be scheduled to happen once or twice a day, and while the data is not real time, it is usually sufficient for customer relationship management purposes. This process of extracting, transforming and loading (ETL anyone?) brings us to the tools of the trade.
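To make the extract and transform steps concrete, here is a minimal Python sketch, assuming the core hands over a CSV file. The column names (`CUST_NO`, `FIRST_NM`, `LAST_NM`, `PHONE`) and the `Core_Customer_Id__c` external ID field are made up for illustration, and the load step is left as a comment since it depends on which tool or API you pick.

```python
import csv
import io

# Hypothetical core extract; real column names vary by core and by bank.
CORE_EXTRACT = """CUST_NO,FIRST_NM,LAST_NM,PHONE
1001,ada,LOVELACE,212-555-0100
1002,grace,HOPPER,(212) 555-0101
"""


def extract(text: str) -> list:
    """Parse the core's CSV export into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(row: dict) -> dict:
    """Reshape one core row into a Contact-like record."""
    digits = "".join(ch for ch in row["PHONE"] if ch.isdigit())
    return {
        "Core_Customer_Id__c": row["CUST_NO"].strip(),  # assumed external ID
        "FirstName": row["FIRST_NM"].strip().title(),
        "LastName": row["LAST_NM"].strip().title(),
        "Phone": digits,
    }


records = [transform(row) for row in extract(CORE_EXTRACT)]
# Load step: hand `records` to whichever loader or API client you use.
```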

Tons of Tools

Any integration is going to need some tools, but picking the best one for the job can be a lot to manage. The first thing to consider is the use of what I would call "flat loaders" such as the Salesforce.com Data Loader or free tools such as Jitterbit. For these, the data coming from the core has to be well organized and singular in nature. For example, each file needs to contain only one type of data (Clients) and not need any transformation (formatting, splitting or other complex manipulation). While most banking cores have tools to get the data out, it is usually not as clean as it needs to be, often including lots of duplicates and mixed data. This is when you would need a bigger tool (cue the Jaws score).
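To show what "mixed data" and duplicates mean in practice, here is a small Python sketch of the cleanup a flat loader cannot do for you: splitting one mixed extract into one list per record type and dropping repeated rows. The `type` and `id` keys are hypothetical stand-ins for whatever your core actually exports.

```python
from collections import defaultdict


def split_and_dedupe(rows: list) -> dict:
    """Group rows by record type, keeping the first row seen per (type, id)."""
    seen = set()
    by_type = defaultdict(list)
    for row in rows:
        key = (row["type"], row["id"])
        if key in seen:
            continue  # duplicate of a row already kept
        seen.add(key)
        by_type[row["type"]].append(row)
    return dict(by_type)
```

Each resulting list holds a single type of data, which is the shape a flat loader expects.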

The next step up (and it is a big step) is Extract Transform Load (ETL) software from providers such as Informatica, Jitterbit, Talend, Actian and Boomi. These are either cloud or on-premise tools with built-in connectors that allow for complex logic, such as grabbing a set of files from an SFTP site, sorting, de-duping and transforming the data, and then upserting it into Salesforce in a brand new data model. These tools come with the drawbacks of extra expense ($500 a month and up) and complexity, but will provide better overall support and handling of larger data sets. Not a must, but highly recommended.
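The "upsert" part is what makes a twice-a-day batch safe to re-run: a record is updated if its key already exists and created if it does not. Here is a minimal in-memory Python sketch of that behavior. The dictionary stands in for Salesforce, and the `Core_Customer_Id__c` key field used in the example is an assumed external ID, not a standard field.

```python
def upsert(target: dict, records: list, key_field: str) -> dict:
    """Insert or update records in `target`, keyed on `key_field`.

    `target` is a plain dict standing in for the destination system.
    Returns a count of how many records were created versus updated.
    """
    counts = {"created": 0, "updated": 0}
    for record in records:
        key = record[key_field]
        if key in target:
            target[key].update(record)
            counts["updated"] += 1
        else:
            target[key] = dict(record)
            counts["created"] += 1
    return counts
```

Running the same batch twice against the same target creates each record once and updates it afterwards, rather than piling up duplicates.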

New Data Model

The next hurdle is the complex nature of core banking data, which originates in a traditional database schema but needs to end up in Salesforce, which only supports some basic database concepts. There are two issues to keep in mind. The first is the many-to-many relationship of banking products to customers: a customer can have many banking products (Savings, Checking, Debit), but those products can also have many different customers attached to them (Signers, Owners, Primary, Secondary, Account Holders, Organizations). Building the correct data model will make any data integration much easier.
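One common way to model a many-to-many relationship is a junction record that sits between the two sides, one row per customer-and-product pairing. Here is a minimal Python sketch of that idea. The `AccountRole` name and its fields are hypothetical, and this is one possible shape, not a prescription for your org.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccountRole:
    """Junction record: one row per (customer, financial account) pairing."""
    customer_id: str
    account_number: str
    role: str  # e.g. "Primary", "Signer", "Owner"


def accounts_for(customer_id: str, roles: list) -> list:
    """All account numbers a customer is attached to, in sorted order."""
    return sorted({r.account_number for r in roles if r.customer_id == customer_id})


def customers_on(account_number: str, roles: list) -> list:
    """All customer ids attached to one account, in sorted order."""
    return sorted({r.customer_id for r in roles if r.account_number == account_number})


roles = [
    AccountRole("C1", "A100", "Primary"),
    AccountRole("C2", "A100", "Signer"),
    AccountRole("C1", "A200", "Owner"),
]
```

With the junction in place, both questions ("what does this customer hold?" and "who is on this account?") are answered by filtering the same list.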

The second consideration is the complexity of different types of entities such as clients, customers, prospects, organizations, trusts, businesses and households. While some, such as clients, seem like easy matches to Salesforce.com Contacts, others, like Trusts or Households, are not as clear cut. Depending on the data source and business objective, the answers to some of these questions can be handled at the core or on platform. For example, here at Arkus we have built out a custom householding application that runs on the Salesforce1 platform and does the work of much older, slower, and more expensive householding applications. That, though, is a blog post for another time.

Process Flow & Record Ownership

The last consideration is the flow and ownership of the data. Most banking cores don't have a concept of data ownership, so matching that up with Salesforce, where every record must be owned by a Salesforce licensed user, can be a challenge. Best practice is to work with the business to figure out where record ownership matters (Opportunities) and where it might not matter at all (Households), then work that into the integration logic.
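Here is a minimal Python sketch of what working ownership into the integration logic could look like. It assumes the core record carries some kind of officer code that can be mapped to a Salesforce user, and that a generic integration user owns everything else. The `officer_code` field, the mapping and the user Id are all placeholders.

```python
# Placeholder Id for a generic integration user; not a real record.
INTEGRATION_USER_ID = "005000000000000AAA"

# Objects where the business decided ownership matters (assumed list).
OWNERSHIP_MATTERS = {"Opportunity"}


def assign_owner(object_name: str, core_record: dict, officer_to_user: dict) -> str:
    """Pick an owner Id for a record arriving from the core.

    Falls back to the integration user when ownership does not matter
    for this object or when the officer code has no mapped user.
    """
    if object_name in OWNERSHIP_MATTERS:
        officer = core_record.get("officer_code")
        return officer_to_user.get(officer, INTEGRATION_USER_ID)
    return INTEGRATION_USER_ID
```

The fallback matters: since every record needs an owner, an unmapped or missing officer code should still resolve to a valid user rather than fail the load.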

It is also important to take into consideration the flow of the data from the core into Salesforce and what is editable. In a daily batch example, any data element that is coming from the core should be locked down in Salesforce as any changes will just be overwritten during the next run. The tip here is to use record types and page layouts to control the different fields and when they can and cannot be edited.
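To see why core-sourced fields should be locked down, here is a small Python sketch of what a daily batch effectively does to a record. The field names (`Balance__c`, `Account_Status__c`, `Nickname__c`) are hypothetical, and the function is a simplified stand-in for the real load.

```python
# Fields whose source of truth is the core (assumed list for illustration).
CORE_OWNED_FIELDS = {"Balance__c", "Account_Status__c"}


def apply_batch(salesforce_record: dict, core_record: dict) -> dict:
    """Return the record as it looks after the next batch run.

    Core-owned fields are overwritten with the core's values; fields
    that only live in Salesforce are left alone.
    """
    merged = dict(salesforce_record)
    for field in CORE_OWNED_FIELDS:
        if field in core_record:
            merged[field] = core_record[field]
    return merged
```

A user edit to `Balance__c` would silently disappear on the next run, while an edit to a Salesforce-only field like `Nickname__c` would survive, which is exactly the split that page layouts and record types should make visible to users.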

There is a lot to consider in doing a core banking integration, and while it is certainly not impossible, the more upfront thought and planning put into it, the greater the chance of success.

If you have questions, suggestions or other tips on doing large scale integrations, feel free to post them below in the comments, in the Success Community, on our Facebook page or to message me directly at @JasonMAtwood.